In the rapidly evolving world of artificial intelligence, neural networks stand out as a game-changer. These algorithms are loosely inspired by the brain’s network of interconnected neurons, and they enable machines to learn from vast amounts of data. As businesses and researchers increasingly rely on machine learning, understanding neural network programming becomes essential for anyone looking to tap into the potential of AI.

From image recognition to natural language processing, neural networks are transforming industries and driving innovation. However, diving into this complex field can be daunting for newcomers. Understanding the fundamentals of neural network programming opens doors to creating intelligent systems that detect patterns in data and make accurate predictions. With the right guidance, newcomers can learn to harness the capabilities of neural networks and contribute to the future of technology.

Overview of Neural Networks Programming

Neural networks programming involves constructing algorithms loosely modeled on the connections between neurons in the human brain. These algorithms enable computers to process data, recognize patterns, and make decisions. Python is the most widely used language for this work, though R, Java, and other languages also offer neural network libraries; popular frameworks include TensorFlow, Keras, and PyTorch.

Key components of neural networks include:

- Neurons: Basic units that process inputs and generate outputs.

- Layers: Multiple neurons grouped together, including input, hidden, and output layers.

- Weights: Numerical values assigned to connections between neurons, determining how strongly each input influences a neuron’s output.

- Activation Functions: Mathematical functions that determine neuron output and help introduce non-linearity into the model.
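To make these components concrete, here is a minimal sketch of a single neuron in plain Python, with no framework. It assumes the common formulation output = activation(sum of weight × input + bias); the bias term and the names `neuron` and `sigmoid` are illustrative additions, and the weights are hand-picked rather than learned.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias, activation):
    """Weighted sum of the inputs plus a bias, passed through an activation function."""
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)


# Two inputs with hand-picked (hypothetical) weights: z = 1.0*0.5 + 2.0*(-0.25) + 0.0 = 0.0
out = neuron([1.0, 2.0], [0.5, -0.25], bias=0.0, activation=sigmoid)
print(out)  # sigmoid(0.0) = 0.5
```

In a real network, many such neurons are grouped into layers, and training adjusts the weights and biases instead of fixing them by hand.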

Programming neural networks requires a solid grasp of concepts like supervised and unsupervised learning, loss functions, and backpropagation. This understanding enables developers to train models effectively, adjusting the weights to reduce the loss through optimization techniques like gradient descent.
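As a minimal illustration of gradient descent, the sketch below minimizes the toy function f(w) = (w - 3)², whose gradient is 2(w - 3). The learning rate, step count, and the helper name `gradient_descent` are hypothetical choices; in a real network, backpropagation would supply the gradient of the loss for every weight.

```python
def gradient_descent(grad, w0, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient: w <- w - learning_rate * grad(w)."""
    w = w0
    for _ in range(steps):
        w -= learning_rate * grad(w)
    return w


# f(w) = (w - 3)**2 is minimized at w = 3; its derivative is 2 * (w - 3).
w_min = gradient_descent(lambda w: 2 * (w - 3), w0=0.0)
print(w_min)
```

With this learning rate, each step shrinks the distance to the minimum by a factor of 0.8, so after 100 steps `w_min` is extremely close to 3. A learning rate that is too large can overshoot and diverge instead.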

Real-world applications of neural networks span diverse domains, such as:

- Image Recognition: Systems that identify objects within images.

- Natural Language Processing: Models that understand and generate human language.

- Recommendation Systems: Algorithms that analyze user behavior to suggest products or content.

Working with neural networks opens opportunities for innovation and sharpens problem-solving skills in the AI landscape, and each project in this field contributes to advancements that affect both technology and everyday life.

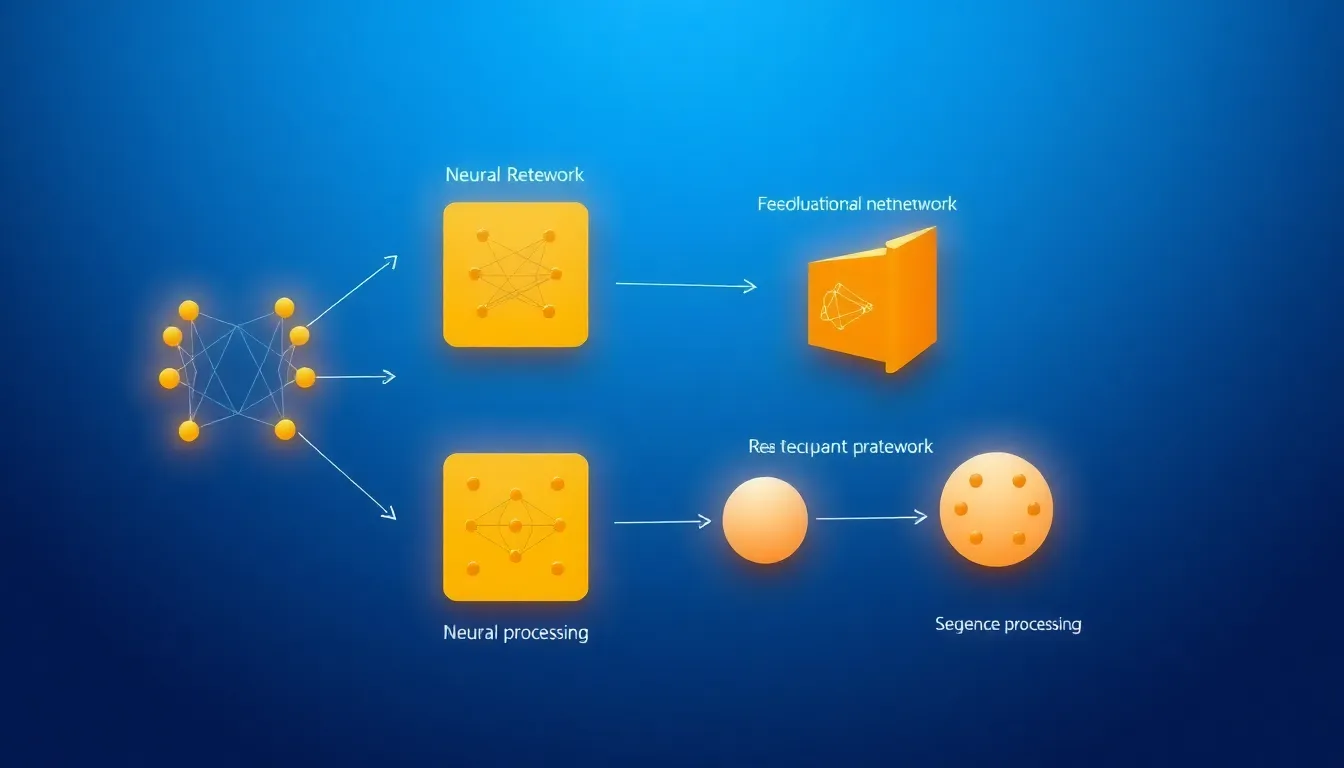

Types of Neural Networks

Various types of neural networks serve specific functions in artificial intelligence applications. Understanding these architectures helps programmers choose the right one for a given task, which directly affects model performance.

Feedforward Neural Networks

Feedforward neural networks represent the simplest type of neural network architecture. In these networks, data moves in one direction, from input to output, without any cycles or loops; each neuron in one layer connects only to neurons in subsequent layers. Despite their simple design, they handle tasks like pattern recognition and function approximation well. Typical applications include basic image classification and predictive modeling.
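The one-way flow of data can be sketched as a loop over layers, where each layer computes weighted sums followed by an activation. This is a plain-Python illustration that assumes a tiny hypothetical network with 2 inputs, 3 hidden ReLU neurons, and 1 linear output, using hand-picked weights; frameworks such as Keras or PyTorch provide optimized dense layers instead.

```python
def relu(z):
    return max(0.0, z)


def identity(z):
    return z


def dense(inputs, weights, biases, activation):
    """Fully connected layer: row k of `weights` holds the weights into output neuron k."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def feedforward(x, layers):
    """Apply each layer in order; the output of one layer is the input of the next."""
    for weights, biases, activation in layers:
        x = dense(x, weights, biases, activation)
    return x


# Hypothetical 2-3-1 network with hand-picked (not trained) weights.
layers = [
    ([[1.0, 0.0], [0.0, 1.0], [1.0, -1.0]], [0.0, 0.0, 0.0], relu),  # hidden layer
    ([[1.0, 1.0, 1.0]], [0.5], identity),                            # output layer
]

print(feedforward([2.0, 1.0], layers))
```

Because there are no cycles, the whole computation is a single pass over the layer list; nothing computed later feeds back into an earlier layer.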

Convolutional Neural Networks

Convolutional neural networks (CNNs) are designed for data with a grid-like topology, such as images. Convolutional layers slide small filters (kernels) across the input to detect local features, and stacking these layers lets the network build spatial hierarchies, from edges to shapes to whole objects. Pooling layers then reduce the spatial size of the resulting feature maps while retaining the most salient features, which helps the network identify patterns and objects efficiently. CNNs are used extensively in image and video recognition, as well as in medical image analysis.
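Here is a minimal sketch of the two core CNN operations, written in plain Python for readability: a "valid" convolution (stride 1, no padding, no kernel flip, which is how most deep learning libraries define it) and 2×2 max pooling. The edge-detecting kernel is a hand-picked example; in a real CNN the kernel values are learned during training.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution with stride 1 (cross-correlation: the kernel is not flipped)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    rows, cols = len(feature_map), len(feature_map[0])
    return [
        [
            max(feature_map[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, cols - size + 1, size)
        ]
        for i in range(0, rows - size + 1, size)
    ]


# A 5x5 "image" that is dark on the left and bright on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# A hypothetical 2x2 vertical-edge detector.
kernel = [[-1, 1], [-1, 1]]

feature_map = conv2d(image, kernel)  # 4x4: responds where brightness changes
pooled = max_pool(feature_map)       # 2x2: smaller, but the edge is still detected
print(feature_map)
print(pooled)
```

The input shrinks from 5×5 to 2×2, yet the strong response marking the edge survives, which is the "reduce size, keep features" behavior described above.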

Recurrent Neural Networks

Recurrent neural networks (RNNs) incorporate cycles in their architecture, allowing them to maintain a form of memory. This design enables RNNs to process sequences of data, making them suitable for tasks involving time series or textual data. RNNs are particularly valuable in natural language processing applications, such as language translation and speech recognition. Variants like Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs) improve on basic RNNs by using gating mechanisms that mitigate the vanishing-gradient problem, which makes it easier to learn long-term dependencies in sequences.
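The "memory" of an RNN comes from feeding each step's hidden state back in at the next step. The sketch below assumes the simplest (Elman-style) recurrence with a single hidden unit, h_t = tanh(w_x·x_t + w_h·h_{t-1} + b), and hypothetical hand-picked weights; LSTMs and GRUs add gates on top of this basic loop.

```python
import math


def rnn_forward(sequence, w_x, w_h, b, h0=0.0):
    """Run a one-unit RNN over `sequence` and return every hidden state.

    h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)
    """
    h = h0
    states = []
    for x in sequence:
        # The same weights are reused at every time step; h carries context forward.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# Hypothetical weights. The two sequences differ only in their FIRST element.
states_early = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
states_zero = rnn_forward([0.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
print(states_early[-1], states_zero[-1])
```

The final hidden states differ even though the last two inputs are identical, because the early input is carried forward through h. With a recurrent weight below 1, that influence fades step by step, a small-scale view of why plain RNNs struggle with long-term dependencies.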

Key Programming Languages and Frameworks

Programming neural networks requires familiarity with specific languages and frameworks that streamline development and help models run efficiently. The following pairings of languages and frameworks are among the most common in neural network programming.

Python and TensorFlow

Python serves as the primary language for many neural network applications due to its simplicity and extensive libraries. TensorFlow, developed by Google, provides a comprehensive ecosystem for building and deploying machine learning models, and its flexibility lets programmers build complex neural networks. Automatic differentiation computes gradients without hand-derived formulas, and GPU support speeds up training, making TensorFlow a fitting choice for both research and production environments.

R and Keras

R is notable for its statistical capabilities, making it widely used in data analysis and visualization. Keras is a high-level API for neural networks that simplifies model building; it has long run on top of TensorFlow, and Keras 3 can also use JAX or PyTorch as a backend. R users can access Keras through its R interface to construct deep learning models while still using R’s rich statistical ecosystem. This combination supports quick prototyping and experimentation with neural network architectures, particularly in academic and research settings.

C++ and PyTorch

C++ excels in performance-critical applications, offering fine-grained control over system resources. PyTorch, a framework originally developed at Facebook (now Meta), provides GPU-accelerated tensor computation for building and training neural networks. Its dynamic computation graph is built on the fly as the code runs, so the model’s structure can change from one input to the next, which simplifies debugging and experimentation. PyTorch’s C++ API (LibTorch) lets developers implement and deploy high-performance neural networks, making it a preferred choice for large-scale applications requiring optimized execution.
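To illustrate what a dynamic ("define-by-run") computation graph with automatic differentiation means, here is a toy scalar version in plain Python. It is not PyTorch's API: the class `Value` and its methods are hypothetical, and only addition and multiplication are supported. The graph is recorded as ordinary Python code executes, so an `if` statement can change its shape, and `backward()` then applies the chain rule through whatever graph was built.

```python
class Value:
    """A scalar that records how it was computed (a toy define-by-run graph)."""

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        self._backward = lambda: None  # pushes this node's grad to its parents

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))

        def backward():
            # d(a + b)/da = 1 and d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward = backward
        return out

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))

        def backward():
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward = backward
        return out

    def backward(self):
        # Order nodes so each comes after everything it depends on,
        # then apply the chain rule from the output back toward the inputs.
        order, seen = [], set()

        def visit(node):
            if node not in seen:
                seen.add(node)
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            node._backward()


x, w, b = Value(2.0), Value(-3.0), Value(1.0)
y = x * w + b
# Ordinary Python control flow decides the shape of the graph at run time.
z = y * y if y.data < 0 else y
z.backward()
print(z.data, x.grad, w.grad, b.grad)
```

Here y is negative, so z = (x·w + b)², and the recorded gradients match the hand-derived values dz/dx = 2(x·w + b)·w = 30, dz/dw = -20, and dz/db = -10.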

Essential Concepts in Neural Networks

Understanding essential concepts in neural networks lays the groundwork for effective programming. Three of the most important are activation functions, loss functions, and the backpropagation algorithm used to train networks.

Activation Functions

Activation functions introduce non-linearity into the neural network, enabling it to learn complex patterns. Common activation functions include:

- Sigmoid: Outputs values between 0 and 1, which makes it useful in the output layer for binary classification.

- ReLU (Rectified Linear Unit): Outputs the input directly if positive, otherwise outputs zero, improving training speed and reducing the likelihood of vanishing gradients.

- Tanh: Outputs values between -1 and 1; because its output is centered on zero, it often trains better than sigmoid in hidden layers.
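The three activation functions take only a few lines of plain Python. The derivative helpers are an addition that hints at the vanishing-gradient point above: the sigmoid's derivative never exceeds 0.25, so products of many such factors during backpropagation tend to shrink, whereas ReLU's derivative is exactly 1 for positive inputs. The function names are illustrative; frameworks ship their own implementations.

```python
import math


def sigmoid(z):
    # Numerically stable form: avoids overflow in math.exp for large |z|.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def relu(z):
    return max(0.0, z)


def tanh(z):
    return math.tanh(z)


def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)  # never larger than 0.25 (reached at z = 0)


def relu_grad(z):
    return 1.0 if z > 0 else 0.0  # 0 at z == 0 by convention in this sketch


for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.4f}  relu={relu(z):.1f}  tanh={tanh(z):+.4f}")
```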

Selecting an appropriate activation function significantly impacts the convergence and overall performance of the neural network.

Loss Functions

Loss functions measure the discrepancy between predicted outcomes and actual results, guiding model optimization. Key loss functions include:

- Mean Squared Error (MSE): Used for regression tasks; it calculates the average of the squared differences between predicted and actual values.

- Cross-Entropy Loss: Commonly used for classification problems; it compares the predicted probability distribution with the true distribution.

- Hinge Loss: Primarily used in support vector machines; it penalizes predictions that fall on the wrong side of the decision boundary or too close to it, which encourages a wide margin between classes.
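Here is a minimal plain-Python sketch of the three losses. It assumes the binary case for cross-entropy (labels 0/1 and predicted probabilities of class 1), and labels of -1/+1 with raw, unsquashed scores for hinge loss. The clipping constant `eps` is an illustrative safeguard against log(0), not a value taken from any particular library.

```python
import math


def mse(y_true, y_pred):
    """Mean squared error for regression."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Two-class cross-entropy: y_true in {0, 1}, p_pred = predicted P(class 1)."""
    total = 0.0
    for t, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip so log() never sees 0
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)


def hinge(y_true, scores):
    """Hinge loss: y_true in {-1, +1}, scores are raw model outputs."""
    # Zero loss only when the score is on the correct side with a margin of at least 1.
    return sum(max(0.0, 1.0 - t * s) for t, s in zip(y_true, scores)) / len(y_true)


print(mse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]))
print(binary_cross_entropy([1, 0], [0.9, 0.2]))
print(hinge([1, -1], [2.0, 0.5]))
```

In the hinge example, the first prediction is correct with a margin of 2 and costs nothing, while the second is on the wrong side of zero and is penalized.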

Choosing the right loss function is essential for accurate model performance and effective training.

Backpropagation

Backpropagation is the key algorithm for training neural networks: it computes how much each weight contributed to the loss, so that an optimizer such as gradient descent can adjust the weights to minimize that loss. The process involves:

- Forward Pass: The input data passes through the network to generate predictions.

- Loss Calculation: The difference between predictions and actual values is computed using the loss function.

- Backward Pass: The algorithm applies the chain rule, working backward from the output layer, to calculate the gradient of the loss function with respect to each weight.

After each backward pass, the weights are updated in the direction that reduces the loss. Repeating this cycle over the training data refines the weights systematically, ultimately improving the model’s predictive capabilities.
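The three steps above, followed by a gradient-descent weight update, can be sketched for the smallest possible case: one sigmoid neuron with a squared-error loss on a single training example. This is an illustrative assumption rather than a general implementation; the function names, learning rate, and data are hypothetical. The finite-difference check at the end is a common way to confirm that hand-written backward-pass formulas are correct.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss_and_grads(x, y, w, b):
    """One sigmoid neuron with squared-error loss; returns (loss, dL/dw, dL/db)."""
    # 1. Forward pass
    z = w * x + b
    p = sigmoid(z)
    # 2. Loss calculation
    loss = (p - y) ** 2
    # 3. Backward pass (chain rule): dL/dw = dL/dp * dp/dz * dz/dw
    dL_dp = 2.0 * (p - y)
    dp_dz = p * (1.0 - p)
    dL_dz = dL_dp * dp_dz
    return loss, dL_dz * x, dL_dz * 1.0


def train(x, y, w=0.0, b=0.0, lr=1.0, steps=50):
    history = []
    for _ in range(steps):
        loss, dw, db = loss_and_grads(x, y, w, b)
        history.append(loss)
        # 4. Weight update: plain gradient descent on the computed gradients
        w -= lr * dw
        b -= lr * db
    return w, b, history


# Check the analytic gradient against a central finite difference.
h = 1e-6
_, dw, _ = loss_and_grads(2.0, 1.0, 0.3, -0.1)
numeric_dw = (loss_and_grads(2.0, 1.0, 0.3 + h, -0.1)[0]
              - loss_and_grads(2.0, 1.0, 0.3 - h, -0.1)[0]) / (2 * h)
print(dw, numeric_dw)

w, b, history = train(x=1.0, y=1.0)
print(history[0], history[-1])
```

Frameworks automate step 3 for networks with millions of weights, but the chain-rule bookkeeping is the same as in this single-neuron case.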

Applications of Neural Networks Programming

Neural networks programming finds extensive applications across various domains, showcasing its versatility and efficacy in solving complex problems. Key areas of application include image recognition, natural language processing, and autonomous systems.

Image Recognition

Image recognition leverages neural networks, especially convolutional neural networks (CNNs), to analyze and interpret visual data. CNNs excel at identifying patterns and features within images, facilitating tasks such as object detection, facial recognition, and medical image analysis. For instance, systems like Google Photos utilize image recognition to categorize and tag images based on content, improving user experience. Deep learning libraries like TensorFlow and Keras provide robust tools for developers to build sophisticated image recognition models quickly.

Natural Language Processing

Natural language processing (NLP) has long relied on recurrent neural networks (RNNs) and their variants to analyze and generate human language. RNNs, particularly Long Short-Term Memory (LSTM) networks, handle sequential data effectively, making them suitable for applications like sentiment analysis, machine translation, and chatbots. More recent systems such as ChatGPT are built on transformer-based neural networks rather than RNNs; they use NLP to understand user queries and generate relevant responses, transforming how users interact with technology. Frameworks like PyTorch help developers build, train, and experiment with these NLP models.

Autonomous Systems

Autonomous systems integrate neural networks to process data from sensors and make real-time decisions. From self-driving cars to drones, neural networks support navigation, obstacle detection, and path planning, and some systems are trained with reinforcement learning techniques. Tesla’s Autopilot system exemplifies this application, using neural networks to interpret visual data from the car’s cameras. Programming autonomous systems with specialized libraries allows developers to fine-tune models for high performance and reliability in dynamic environments.

Mastering neural networks programming is essential for anyone looking to harness the power of artificial intelligence. This field offers wide-ranging opportunities for innovation and problem-solving across industries. By understanding key concepts and using the right tools, developers can create intelligent systems that analyze data accurately and make informed decisions.

As technology continues to evolve, demand for skilled professionals in neural networks is likely to keep growing. Engaging with this complex yet rewarding discipline not only enhances individual capabilities but also contributes to advancements that shape the future of AI. Embracing the journey into neural networks programming can lead to exciting breakthroughs and transformative applications in everyday life.